Unsecured Server Exposure Reveals Billions of Credentials: The Urgent Impact of a Massive Elastic Database Leak

Summary

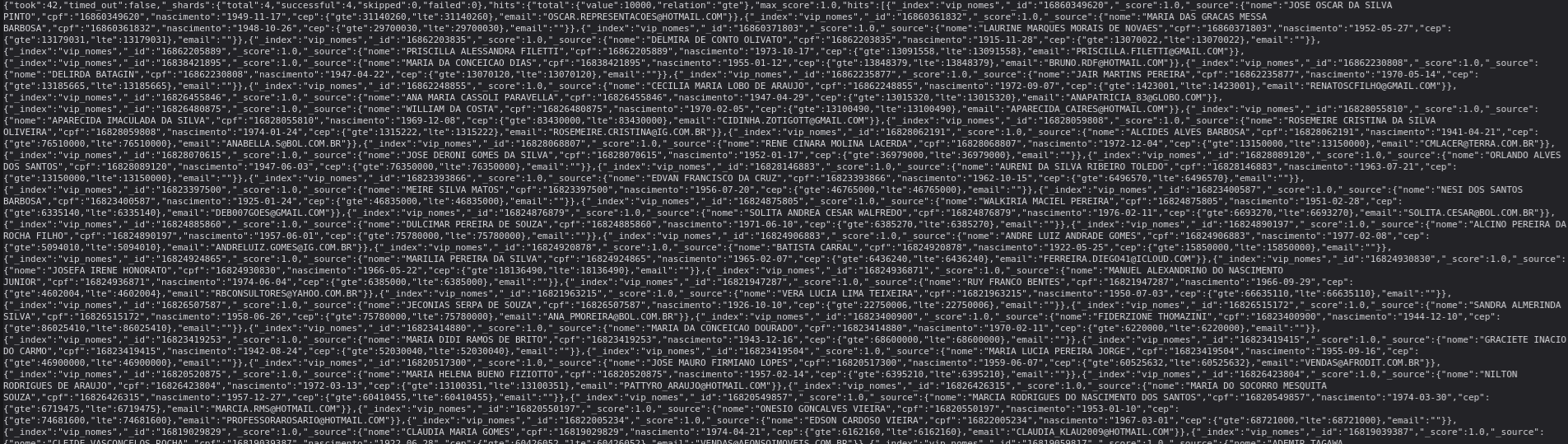

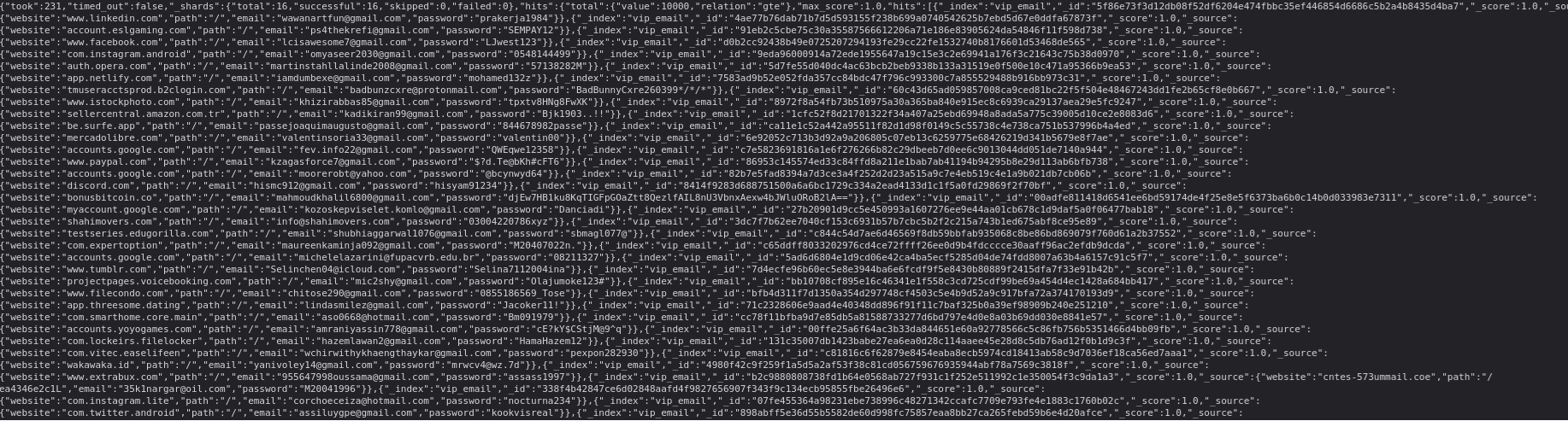

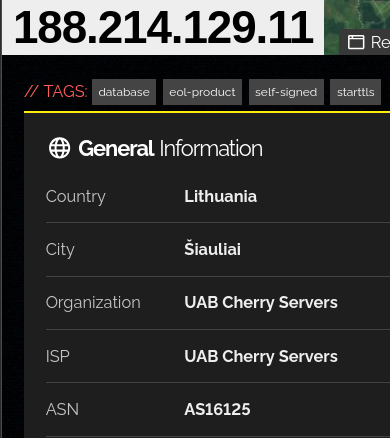

In an incident that underscores how exposed modern data stores can be, a publicly reachable Elasticsearch instance (hosted at IP 188.214.129.11, assigned to UAB Cherry Servers) has reportedly exposed approximately 1,710,059,583 documents totalling about 774.43 GB. The compromised fields include username, URL, email, plain-text password, CPF (Brazilian taxpayer ID), CEP (Brazilian postal code), full name, and date of birth (DOB). The leak is severe both for individual users and for the organizations responsible for safeguarding credentials and personal data. In this article we examine the exposure, assess the risks, review how such exposures occur, and outline a checklist of practical mitigation steps. 🔍

What exactly happened with the unsecured data leak?

The incident came to light when the team at Kaduu discovered the unsecured Elasticsearch instance during dark-web/deep-web scanning. The server details are: IP 188.214.129.11; size approximately 774.43 GB; roughly 1.71 billion documents. The exposed fields are username, URL, email, plain-text password, CPF, CEP, full name, and date of birth (DOB). Because the passwords are stored in plain text, they are immediately usable by anyone who obtains the data.

Importantly, the server was hosted by Cherry Servers (UAB) and appears to have been accessible without appropriate authentication or network segmentation (i.e., a misconfigured open index).

Exposures of unsecured Elasticsearch clusters are well documented: an unsecured cluster allows anyone on the internet to run queries, extract data, and even modify or delete indices. (Elastic)

In one similar case, a misconfigured Elasticsearch instance exposed more than 5 billion records. (Dark Reading)

Given the scale and nature of the data exposed here, this leak qualifies as a high-impact event with far-reaching implications.

Why this unsecured server leak poses such a huge risk

Immediate credential misuse

When plain-text passwords are exposed, attackers do not need to crack anything: they can immediately attempt account logins, credential stuffing, and targeted phishing. Because the dataset includes both email addresses and URLs, attackers also have the context they need for tailored social-engineering campaigns.
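For contrast, here is a minimal sketch of how a service could store a salted, deliberately slow hash instead of the password itself, so that a leaked record is not directly usable for login. It is not a description of how the exposed data was produced. The function names and the iteration count are illustrative assumptions; only the standard-library calls (`hashlib.pbkdf2_hmac`, `hmac.compare_digest`, `secrets.token_bytes`) are real.

```python
import hashlib
import hmac
import secrets

# Illustrative work factor (an assumption); tune it for your own hardware.
ITERATIONS = 600_000


def hash_password(password: str, iterations: int = ITERATIONS) -> tuple[bytes, bytes, int]:
    """Return (salt, derived_key, iterations) to store instead of the password."""
    salt = secrets.token_bytes(16)  # fresh random salt per password
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, key, iterations


def verify_password(password: str, salt: bytes, key: bytes, iterations: int) -> bool:
    """Re-derive the key from the candidate password and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, key)
```

With records stored this way, an attacker who copies the database still has to guess each password and pay the derivation cost per guess, rather than reading it directly.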

Credential reuse and lateral attacks

Many users reuse passwords across multiple services. Attackers who gain access to an exposed dataset often attempt those credentials on banking systems, corporate logins, and other high-value assets. What begins as exposure of one system becomes a cascading risk.

Scale amplifies reach

With over 1.7 billion documents, the dataset likely covers a wide range of users, domains and services. The more credentials exposed, the greater the incentive for threat actors to package and monetise the data on the dark web.
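As a rough sanity check on those figures, the reported size and document count imply an average document size. The sketch below does the arithmetic under two assumptions that the report does not settle: that "GB" means either 10^9 bytes or 2^30 bytes, and that the size figure covers only these documents.

```python
# Figures as reported for the exposed instance.
DOCUMENTS = 1_710_059_583
SIZE_GB = 774.43


def avg_bytes_per_document(size_gb: float, documents: int, bytes_per_gb: int) -> float:
    """Average stored size per document for a given definition of 'GB'."""
    return size_gb * bytes_per_gb / documents


decimal_avg = avg_bytes_per_document(SIZE_GB, DOCUMENTS, 10**9)  # GB = 10^9 bytes
binary_avg = avg_bytes_per_document(SIZE_GB, DOCUMENTS, 2**30)   # GB = 2^30 bytes
print(f"{decimal_avg:.0f} bytes/doc (decimal GB), {binary_avg:.0f} bytes/doc (binary GB)")
```

Either way the average lands at roughly 450 to 490 bytes per document, which is consistent with short credential-style records rather than large files.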

Automated harvesting & resale

Open Elasticsearch clusters are easy to discover using automated tools (e.g., Shodan), and many breaches result from simple misconfiguration rather than a targeted hack. (Open Raven) Once data is harvested, it quickly appears in criminal marketplaces or is used for credential-stuffing-as-a-service.

Regulatory and reputational fallout

Organizations whose data is exposed face regulatory penalties (e.g., GDPR), class-action litigation, and reputational damage. Exposure of usernames/emails/passwords counts as a mass compromise of personally identifiable information (PII).

This unsecured server leak is therefore not just a technical glitch; it is a strategic threat.

How unsecured Elasticsearch instances get exposed

In many cases, the root cause is misconfiguration rather than a zero-day exploit. Typical scenarios include:

- Elasticsearch HTTP/REST API listening on port 9200 (default) and exposed to the internet without authentication. (Open Raven)

- Administrative front-ends (e.g., Kibana) are exposed; even where their accounts are protected, the underlying cluster remains directly accessible. (Malwarebytes)

- No network segmentation or firewall rules restricting access to trusted hosts/VPNs. (Elastic)

- Clusters originally deployed in “test” mode and later populated with production data but never locked down. (Open Raven)

- No encryption in transit or at rest, and no authentication or access controls on indices.

In the case at hand, the description of 1.7 billion documents and plain-text passwords strongly suggests that the cluster was left wide open, enabling full extraction.
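The first scenario in the list above (an HTTP API on port 9200 that answers without authentication) can be tested from outside with a single request. The sketch below is a minimal illustration using only the Python standard library; the function names and result labels are hypothetical, and it assumes that an open cluster answers the root path with a JSON object containing `cluster_name` and `version`, while a secured one answers 401 or 403. Run it only against hosts you own or are authorised to test.

```python
import json
import urllib.error
import urllib.request


def classify_response(status: int, body: str) -> str:
    """Classify an HTTP response from the root path of a suspected cluster."""
    if status in (401, 403):
        return "auth-required"
    if status == 200:
        try:
            info = json.loads(body)
        except json.JSONDecodeError:
            return "unknown"
        # An unauthenticated root response normally carries cluster metadata.
        if isinstance(info, dict) and "cluster_name" in info and "version" in info:
            return "open"
    return "unknown"


def check_host(host: str, port: int = 9200, timeout: float = 5.0) -> str:
    """Request http://host:port/ without credentials and classify the answer."""
    url = f"http://{host}:{port}/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", "replace")
            return classify_response(resp.status, body)
    except urllib.error.HTTPError as err:  # 4xx/5xx responses land here
        return classify_response(err.code, "")
    except (urllib.error.URLError, OSError):  # refused, filtered, or timed out
        return "unreachable"
```

A result of `open` means the host answered an anonymous request with cluster metadata, which is the condition described above; `unreachable` is the desired outcome for any address outside your trusted network.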

What to do if this affects you or your organisation

For end-users whose credentials may have been exposed

- Change the password for the affected account immediately (if you recognise the service or email domain) and every other account where you reused that same password. ✅

- Enable multi-factor authentication (MFA) wherever possible. 🛡️

- Monitor your accounts for unusual activity: login attempts, password resets you didn’t trigger, unfamiliar devices or IPs.

- Use a unique, strong password (or passphrase) for each service and consider using a password manager.

- Be alert for phishing campaigns referencing your email/username and the exposed URL context.
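For the advice on unique, strong passwords, a password manager will normally generate them for you. As a minimal sketch of what such a generator does, the following uses Python's `secrets` module; the function name, default length, and minimum length are illustrative assumptions.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password drawn from letters, digits, and punctuation."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice draws from a cryptographically secure random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))
```

Generate one password per service and store it in the password manager rather than reusing it.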

For organisations/responsible parties (data controllers or hosting providers)

- Immediately isolate the unsecured server, restrict inbound access (e.g., via firewall or VPC), and disable public HTTP on port 9200 (or make it accessible only via VPN).

- Rotate all exposed credentials and require password resets for potentially impacted users.

- Enable authentication (username/password or API key) for Elasticsearch, enforce TLS encryption and role-based access (see best practice guidance). (Elastic)

- Conduct a forensic review: timeline, access logs, evidence of extraction, data exfiltration indicators.

- Notify the hosting provider (in this case Cherry Servers) and relevant regulatory authority if applicable.

- Provide transparent communication to impacted users/customers: what was exposed, what you’re doing, and what they should do.

- Review and implement a secure configuration baseline for Elasticsearch clusters (lock down default ports, disable open anonymous access, secure snapshot/backups).

- Audit other publicly accessible instances in your estate for similar exposures (there are thousands of open clusters globally). (Security Affairs)
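The forensic review step above depends on spotting clients that pulled unusually large volumes of data. The sketch below shows one simple way to do that, assuming you have per-request records (for example from a reverse proxy or firewall in front of the cluster). The `AccessRecord` shape, the threshold, and the sample addresses are hypothetical and would need adapting to your actual log format.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class AccessRecord(NamedTuple):
    """Hypothetical per-request log record; adapt to your own log format."""
    client_ip: str
    path: str
    response_bytes: int


def flag_bulk_readers(records: Iterable[AccessRecord], byte_threshold: int) -> dict[str, int]:
    """Return {client_ip: total_bytes} for clients at or above the threshold."""
    totals: dict[str, int] = defaultdict(int)
    for rec in records:
        totals[rec.client_ip] += rec.response_bytes
    return {ip: total for ip, total in totals.items() if total >= byte_threshold}


# Example with documentation-range IP addresses and made-up sizes.
sample = [
    AccessRecord("203.0.113.7", "/users/_search?scroll=1m", 40_000_000),
    AccessRecord("203.0.113.7", "/_search/scroll", 60_000_000),
    AccessRecord("198.51.100.2", "/_cluster/health", 400),
]
suspects = flag_bulk_readers(sample, byte_threshold=50_000_000)
```

Here `suspects` contains only `203.0.113.7`, whose two requests add up to 100,000,000 bytes. A flagged client is a lead for the timeline rather than proof of exfiltration.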

Practical tip & checklist for securing Elasticsearch clusters

Use the following checklist to guide remediation and future prevention:

- Ensure Elasticsearch HTTP API (port 9200) is not publicly accessible; restrict to internal network or VPN.

- Enable built-in security features: authentication enabled, TLS enabled for HTTP & transport layers. (Elastic)

- Use role-based access control: separate systems/accounts for admins vs. applications.

- Encrypt data at rest and in transit; avoid storing plain-text sensitive credentials.

- Regularly scan your estate using tools like Shodan or open-source scanners to detect open clusters. (Open Raven)

- Implement logging and alerting on new index creation, unusual query volumes, bulk extraction attempts.

- Maintain backups, but secure them – do not store backups in anonymous public buckets.

- Perform periodic audits and internal penetration tests of your data-stores and configurations.

- Prepare an incident response plan specific to data-store exposures (e.g., open Elasticsearch, Mongo, Redis).

- Educate your team: misconfiguration is often human error rather than external attack.
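Several of the checklist items can be checked mechanically. The sketch below audits a small set of Elasticsearch settings, assuming they have already been parsed from `elasticsearch.yml` into a flat dictionary keyed by dotted setting names. The function name and finding messages are hypothetical. The check is deliberately conservative: it flags any security setting that is not explicitly `true`, even on versions where the default may already be secure.

```python
# Assumption: settings are a flat dict such as {"xpack.security.enabled": True}.
WILDCARD_HOSTS = {"0.0.0.0", "::"}


def audit_settings(settings: dict) -> list[str]:
    """Return a list of findings for settings that leave a node exposed."""
    findings = []
    if settings.get("xpack.security.enabled") is not True:
        findings.append("security features not explicitly enabled")
    if settings.get("xpack.security.http.ssl.enabled") is not True:
        findings.append("TLS not enabled on the HTTP layer")
    if settings.get("xpack.security.transport.ssl.enabled") is not True:
        findings.append("TLS not enabled on the transport layer")
    host = settings.get("network.host")
    hosts = host if isinstance(host, list) else [host]
    if any(h in WILDCARD_HOSTS for h in hosts):
        findings.append("network.host binds to all interfaces")
    return findings
```

An empty list means none of these four checks fired. It does not cover firewall rules, role design, or backup storage, which still need separate review.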

Conclusion

The discovery of an exposed server at IP 188.214.129.11 containing 1.7 billion+ documents with usernames, emails, URLs and plain-text passwords is a sharp reminder that misconfigured data stores remain one of the most serious threats to cybersecurity. Whether you are an individual user worried about personal credential exposure or an organisation responsible for safeguarding sensitive systems, this incident underscores the urgent need for vigilance, proper configuration, and rapid remediation.

Don’t wait for an attacker’s footprint to appear—act now.


Q: What is dark web monitoring?

A: Dark web monitoring is the process of tracking your organization’s data on hidden networks to detect leaked or stolen information such as passwords, credentials, or sensitive files shared by cybercriminals.

Q: How does dark web monitoring work?

A: Dark web monitoring works by scanning hidden sites and forums in real time to detect mentions of your data, credentials, or company information before cybercriminals can exploit them.

Q: Why use dark web monitoring?

A: Because it alerts you early when your data appears on the dark web, helping prevent breaches, fraud, and reputational damage before they escalate.

Q: Who needs dark web monitoring services?

A: MSSPs and any organization that handles sensitive data, valuable assets, or customer information, from small businesses to large enterprises, benefit from dark web monitoring.

Q: What does it mean if your information is on the dark web?

A: It means your personal or company data has been exposed or stolen and could be used for fraud, identity theft, or unauthorized access. Immediate action is needed to protect yourself.

Q: What types of data breach information can dark web monitoring detect?

A: Dark web monitoring can detect data breach information such as leaked credentials, email addresses, passwords, database dumps, API keys, source code, financial data, and other sensitive information exposed on underground forums, marketplaces, and paste sites.